Using Unsupervised Machine Learning to Tag Blog Posts

I’m always thinking of ways to improve my blog. Since July 2024, I’ve written 38 posts and just shy of 40,000 words. This post is the 39th. As the number of posts on my blog has increased, I’ve begun thinking about how to make its content more accessible to readers. To that end, I recently implemented thematic tags to connect posts on similar subjects. This is in contrast to the three categories already implemented; while the categories broadly define the purpose of each article, tags serve a more fine-grained topical or thematic connection between posts.

To explore the kind and number of tags to use, I employed unsupervised machine learning with Python and created a dashboard to analyze the results. Seven tags balancing post count and content relevance were identified. This post describes the implementation of the machine learning algorithm and discusses my findings.

Overview

I used the Python library scikit-learn to implement the machine learning model. scikit-learn is one of the most popular data science libraries for Python, used by the likes of Spotify for their recommendation algorithms. The goal of this project was to explore different ways to cluster my articles based on the content of each article. After grouping the articles, I could choose tags for each article based on the keywords of each cluster and my knowledge of the topic of each article. With this goal in mind, I needed to create a set of article clusters, show the top keywords for each cluster, and list the articles that belong in each cluster.

Several important technical and practical considerations were made. First, choice of machine learning model and processing pipeline were considered. Because the goal of the project was exploratory rather than predictive, unsupervised machine learning models were necessary. That meant choosing classifiers such as k-means or SVM. Second, because the data involved raw text, natural language processing (NLP) would be required.

In order to classify raw text and discover the relative importance of different keywords across the corpus of my blog articles, I calculated TF-IDF scores for each word. TF-IDF measures the importance of a word across a corpus by measuring how often a word appears in a particular document compared to how often the word appears in general across all the documents in the corpus.

Methods

The process for this machine learning project consisted of data preprocessing and cleaning, model selection and evaluation, and analysis and deployment.

The data for my corpus was stored in Markdoc files, a type of text file with a structured metadata section at the top and a simple markup in the body of the document. To process each document, I needed to extract relevant information from each post’s metadata and strip the rest from the document. I also needed to find and remove certain markup elements from the text bodies, such as image references and special tags. Removing these would prevent certain terms from polluting the TF-IDF measurements.

For example, Markdoc image references take the form . Words from the file path such as jpg and image don’t add useful word frequency information, so I used regular expressions to find and remove such markup. Other examples include custom Markdoc tags which I created for the website to add custom functionality. These tags take the form {% tag_name attribute="some value" %}. Removing these from the corpus would allow the machine learning model to focus more on the actual content.

To convert the raw text into numerical vectors suitable for machine learning, scikit-learn’s TfidfVectorizer was used. Some hyper-parameters were adjusted for the vectorizer. First, scikit-learn’s built-in English stop-word list was used to remove common English words such as “the.” The minimum document frequency was set to two so that terms unique to just one document wouldn’t have an excessive impact on clustering. N-grams of up to two words long were also factored into the vectorization process. Considering the relatively small size of the corpus, few n-grams were expected, but some word combinations, such as “machine learning,” would certainly connect posts topically.

During the model selection phase, the k-means classification algorithm was selected for its speed and simplicity. The algorithm ensures that no data points go unclassified. Most hyper-parameters of the model were left at their defaults. Different k values were explored for the clearest clustering based on the silhouette score, which describes the distance of each data point from the nearest cluster to which it doesn’t belong. Silhouette scores range from -1 to 1, with positive values indicating better classification results. For k, values between two and ten were considered.

After model selection was complete, a dashboard was created to display the results and facilitate analysis. This dashboard was built into a private section of the blog website being analyzed. To facilitate data transfer between the model and the front end, a simple Python CLI was created to allow for tuning and data export to JSON, a common web-based data format.

Results and discussion

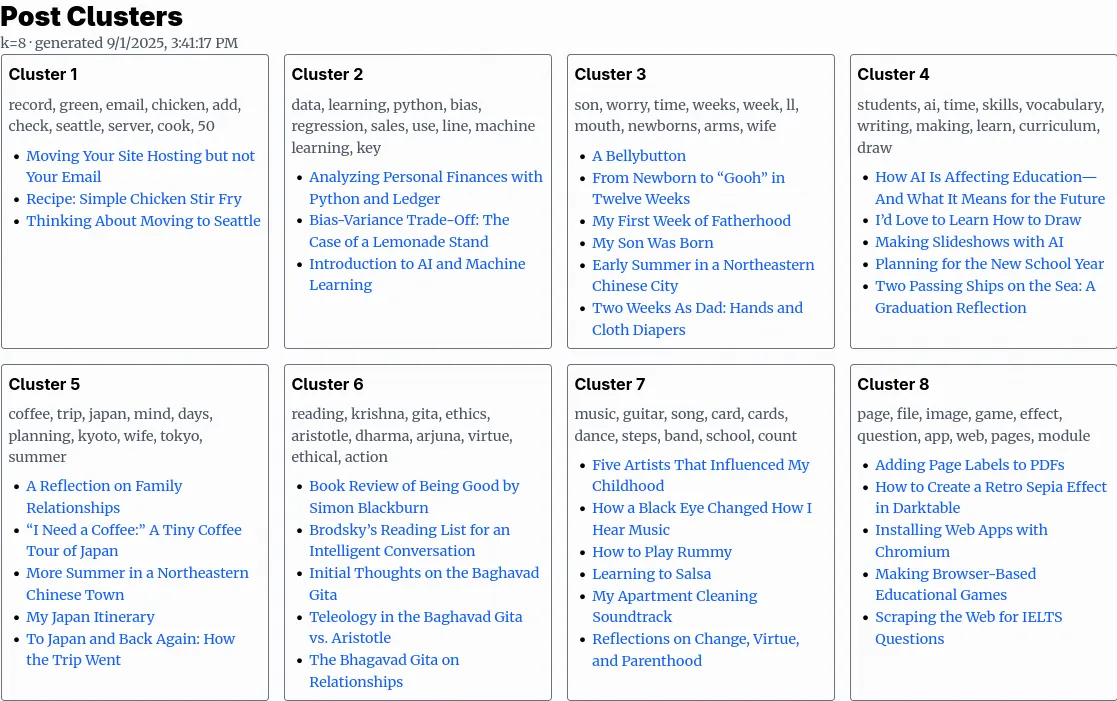

The classification model produced nine different clusters, each with an average of four articles per cluster. Further exploration yielded better results with k = 6 or 8. The results are summarized in the table below.

The dashboard showing the post clusters for k = 8

| Cluster | Theme/Content Type | Outliers | Top Keywords |

|---|---|---|---|

| 1 | Technology / How-to | 1 | record, green, email, chicken, add, check, seattle, server, cook, 50 |

| 2 | Technology / How-to | 0 | data, learning, python, bias, regression, sales, use, line, machine learning, key |

| 3 | Family | 1 | son, worry, time, weeks, week, ll, mouth, newborns, arms, wife |

| 4 | Education | 0 | students, ai, time, skills, vocabulary, writing, making, learn, curriculum, draw |

| 5 | Travel | 1 | coffee, trip, japan, mind, days, planning, kyoto, wife, tokyo, summer |

| 6 | Philosophy | 0 | reading, krishna, gita, ethics, aristotle, dharma, arjuna, virtue, ethical, action |

| 7 | Music / Art | 1 | music, guitar, song, card, cards, dance, steps, band, school, count |

| 8 | Technology / How-to | 0 | page, file, image, game, effect, question, app, web, pages, module |

One of the limitations of this machine learning project was the small corpus size. With only 38 articles, connections between posts were limited. Some posts, for example my recent chicken stir fry recipe or my article expressing my desire to learn to draw, have little connection to other post topics on the site. While more similar posts may be added in the future, tagging them currently remains difficult.

Second, the unsupervised learning process necessarily involves a subjective element. It’s difficult to say whether one particular result is conclusively better than another. For that reason, results from multiple configurations of the model were reviewed during the model tuning process. The main criterion of quality was how well the model’s clustering gave insight into the data.

Lastly, only the k-means model was evaluated. A more complex project would benefit from a more thorough evaluation process including other models such as SVM or DBSCAN. In addition, using a model such as DBSCAN would potentially catch outliers such as the stir fry recipe article. However, exploring one model was sufficient to meet the goals of the current project based on the size of its data set and the scope of its goals.

Conclusion and next steps

This article has shown how unsupervised machine learning can be used to group blog posts for tag selection and analysis, even for a relatively small data set. A variety of clusters counts were evaluated, using the k value provided by a maximum silhouette score as a starting point, with k = 8 being a reasonable final value. From the results, the tags “family,” “tech/programming,” “how-to,” “education,” “travel,” “philosophy,” and “music/art” were identified as good candidates achieving a good balance of specificity and relevance.

Many possibilities remain unexplored for integrating machine learning into this website. For example, the clusters in this project could be used to create a smarter list of related post recommendations at the end of each post. As the number of posts continues to grow, a supervised model could be applied to assign tags to each new post. This could even be integrated into the content management system to streamline the content editing workflow.

As the site continues to grow, other data sources can be integrated into the machine learning process. For example, web analytics data such as site traffic can be harnessed to target the features and topics most appreciated by the readership. This would improve the site’s search engine optimization and reveal new growth opportunities.